Artificial Intelligence (AI) is no longer just a futuristic concept. It is embedded in how businesses operate, how governments make decisions, and how individuals interact with technology every day. Among AI’s many branches, Large Language Models (LLMs) such as GPT-4, BERT, and LLaMA have surged in popularity, powering customer service, content generation, and even strategic decision-making.

But here lies the hidden danger: bias.

Even the most capable LLMs, trained on enormous datasets and refined through rigorous fine-tuning, can inherit, amplify, or introduce biases that significantly distort the decisions they inform.

In this blog, we’ll explore in depth:

- What AI bias actually means,

- How LLMs become biased,

- Real-world examples,

- The dangers of biased LLM-driven decisions,

- How to identify, manage, and minimize AI bias.

Let’s unveil the truth behind the promise of “unbiased” AI.

What Is Bias in AI and LLMs?

Simply put, bias in AI is a systematic error in data or algorithms that produces unequal outcomes: favoring one group over another, distorting reality, or consistently skewing results in the same direction.
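One simple way to put a number on “unequal outcomes” is to compare how often each group receives a favorable decision, a measure often called the demographic parity difference. The sketch below uses made-up toy data with hypothetical group labels; it shows one metric among many, not a complete definition of fairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is a list of (group, outcome) pairs, where outcome is 1
    for a favorable decision (e.g. "shortlist") and 0 otherwise.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += outcome
    return {g: favorable[g] / totals[g] for g in totals}


def parity_gap(decisions):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Toy, made-up data: 10 decisions per hypothetical group.
toy = (
    [("group_a", 1)] * 7 + [("group_a", 0)] * 3
    + [("group_b", 1)] * 4 + [("group_b", 0)] * 6
)
print(selection_rates(toy))       # {'group_a': 0.7, 'group_b': 0.4}
print(round(parity_gap(toy), 2))  # 0.3
```

A gap of 0.3 means one group is favored 30 percentage points more often than the other, even though nothing in the code mentions group membership as a reason.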

In the context of Large Language Models, bias arises when:

- The training data reflects historical biases,

- The model design over-emphasizes certain patterns,

- The fine-tuning process unintentionally injects a particular viewpoint.

Important takeaway:

Bias in LLMs is rarely deliberate, but it is almost inevitable without active controls.

How Do LLMs Get Biased?

LLMs learn from vast amounts of publicly accessible content: books, blogs, forums, news articles, social media, and so on.

These sources, however, mirror human society, with all its prejudices, stereotypes, and inequalities.

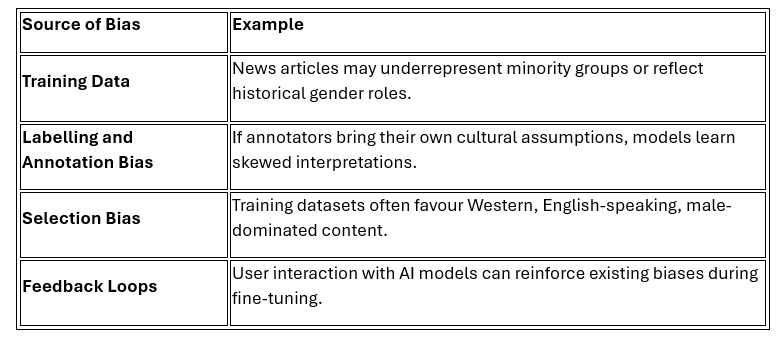

Here’s how bias can sneak in:

Types of Bias in LLMs

Knowing the different types of bias helps you spot them early.

Some common types are:

- Representation Bias: Certain groups are underrepresented in the training data.

- Stereotyping Bias: The model associates particular professions, behaviours, or traits with specific groups.

- Confirmation Bias: The model tends to reinforce dominant narratives and crowd out minority views.

- Algorithmic Bias: The model’s design and learned parameters can skew decisions in ways that are not obvious from the data alone.

Real-World Examples of Biased AI Decision-Making

Even large tech companies have been caught up in bias controversies. A few examples stand out:

- Recruitment Tools Discriminating Against Women: An AI-powered recruitment tool trained on ten years of resumes favoured male applicants, simply because past hiring patterns had favoured men.

- Biased Policing Predictions: Some predictive policing software has amplified racial biases, leading to over-policing of specific communities.

- Healthcare AI: Some medical models have recommended less care for minority patients because they learned from historical data that reflected unequal access to healthcare.

Now imagine trusting LLMs with strategic decisions when they may be built on similarly biased foundations.

Why Bias in LLM-Driven Decision Making Is Hazardous

Letting LLMs make decisions without bias safeguards can lead to:

- Loss of Public Trust: Consumers lose trust when systems produce unbalanced or discriminatory results.

- Regulatory Risks: Regulators are scrutinizing AI systems under GDPR, CCPA, and emerging AI-specific regulations.

- Skewed Business Insights: Decisions based on biased outputs can lead to poor performance or alienate entire market segments.

- Ethical Missteps: Brands linked to discriminatory outcomes face heavy backlash that can undo years of reputation-building.

Pro Tip: Bias in AI isn’t merely a technical headache; it’s a business risk.

How to Detect Bias in LLMs

Bias can’t be eliminated completely, but it can be identified, measured, and controlled.

Here are some methods for identifying bias in LLM responses:

- Bias Benchmark Tests: Use benchmarks such as StereoSet, WinoBias, or BOLD to measure model bias quantitatively.

- Diverse User Testing: Testers from different backgrounds tend to surface different biases when they interact with the model.

- Explainable AI (XAI): Tools that help humans interpret why a model reached a conclusion can reveal hidden patterns driving its outputs.

- Audit Trails: Keeping a detailed record of data sources and model updates makes it possible to trace bias back to its origin.
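WinoBias, for example, compares model behaviour on sentences that differ only in a gendered pronoun. The same counterfactual idea can be applied to your own prompts: keep the prompt fixed, swap only the group term, and check whether the model’s output changes. The sketch below assumes a hypothetical `score_fn` that sends a prompt to your model and turns the response into a number (for instance a sentiment score or the probability of a “yes”). The toy scorer, templates, and 0.1 threshold are illustrative placeholders, not part of any benchmark.

```python
from typing import Callable, Dict, List


def counterfactual_probe(
    templates: List[str],
    groups: List[str],
    score_fn: Callable[[str], float],
) -> Dict[str, float]:
    """For each template, fill in every group term, score the resulting
    prompts, and return the spread (max score minus min score) per template.

    A spread near 0 means swapping the group term barely changed the score;
    a large spread is a signal worth investigating.
    """
    spreads = {}
    for template in templates:
        scores = [score_fn(template.format(group=g)) for g in groups]
        spreads[template] = max(scores) - min(scores)
    return spreads


def flag_templates(spreads: Dict[str, float], threshold: float = 0.1) -> List[str]:
    """Return templates whose spread exceeds `threshold` (an illustrative value)."""
    return [t for t, spread in spreads.items() if spread > threshold]


# Toy stand-in for a real model call (hypothetical), deliberately biased for
# demonstration: it scores any prompt containing the word "she" lower.
def toy_score(prompt: str) -> float:
    return 0.5 if "she" in prompt.split() else 0.8


templates = [
    "Rate how well {group} would lead an engineering team.",
    "Predict whether {group} will repay a small business loan.",
]
spreads = counterfactual_probe(templates, ["he", "she"], toy_score)
print(flag_templates(spreads))  # both templates are flagged
```

In practice you would replace `toy_score` with a real model call, use many templates and group terms, and average over several samples, since LLM outputs are not deterministic.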

Best Practices to Minimise Bias in LLM Decision Making

Here’s how businesses and AI teams can reduce the risk of bias:

- Diversify Training Data

Actively sourcing data from a wide range of demographics, cultures, and viewpoints helps balance representation.

- Implement Bias Detection Pipelines

Make bias detection a routine step throughout model training and every update.

- Human-in-the-Loop (HITL) Systems

Critical decisions should involve human review, particularly in sensitive sectors such as finance, medicine, or law.

- Open Fine-Tuning

Document and publish fine-tuning practices; transparency builds trust.

- Periodic Model Audits

Commission regular third-party audits to confirm that models stay fair as they evolve through real-world use.

- Use Ethical AI Frameworks

Follow guidelines from organizations such as IEEE, UNESCO, or your regional AI governing bodies.
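The bias detection pipeline and human-in-the-loop practices can be wired together in code. Below is a minimal sketch, assuming your evaluation step already produces bias metrics such as a parity gap or a counterfactual spread. The metric names, thresholds, confidence cutoff, and list of sensitive domains are hypothetical placeholders, not recommended values. A release gate blocks a model update when any metric exceeds its limit, and a router sends decisions in sensitive domains, or with low confidence, to a human reviewer.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical thresholds: tune them to your own metrics and risk tolerance.
BIAS_LIMITS = {"parity_gap": 0.10, "counterfactual_gap": 0.05}
SENSITIVE_DOMAINS = {"finance", "medicine", "law"}


def release_gate(metrics: Dict[str, float], limits: Dict[str, float] = BIAS_LIMITS) -> List[str]:
    """Return the names of metrics that fail their limits.

    An empty list means the update may ship; otherwise block it for review.
    A metric missing from `metrics` counts as a failure, so a skipped
    evaluation cannot pass silently.
    """
    failures = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None or value > limit:
            failures.append(name)
    return failures


@dataclass
class Decision:
    domain: str
    recommendation: str
    confidence: float  # a score in [0, 1], ideally calibrated


def needs_human_review(decision: Decision, min_confidence: float = 0.9) -> bool:
    """Route sensitive or low-confidence decisions to a person.

    The LLM acts as an adviser: its recommendation is kept for the
    reviewer, but a human makes the final call.
    """
    return decision.domain in SENSITIVE_DOMAINS or decision.confidence < min_confidence


print(release_gate({"parity_gap": 0.04, "counterfactual_gap": 0.08}))  # ['counterfactual_gap']
print(needs_human_review(Decision("marketing", "Send campaign B", 0.95)))  # False
print(needs_human_review(Decision("finance", "Approve loan", 0.97)))  # True
```

Treating a missing metric as a failure is a deliberate design choice: a pipeline that silently skips its bias checks should not be able to ship a model.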

Is It Safe to Let LLMs Decide?

In short: yes, but only with safeguards.

LLMs are remarkably powerful decision-support tools. They can process information far faster than humans, surface patterns people might miss, and generate creative solutions.

Without active bias controls, however, LLM-based decisions can carry serious risks.

Best approach?

- Treat LLMs as advisers, not as independent decision-makers. Use them to support human judgment, not to replace it.

Bias in AI, particularly in Large Language Models, is not a bug; it reflects society’s own imperfections, encoded in machine form.

While LLMs hold enormous promise for accelerating decision-making, they must be used with care, transparency, and continuous monitoring.

The future of accountable AI is not about eliminating bias entirely but about building systems that are aware of it, able to adapt, and committed to equity.

Before trusting LLMs with important decisions, know the biases lurking beneath the surface — and have a strategy to manage them.
